100 Women of OpenAI

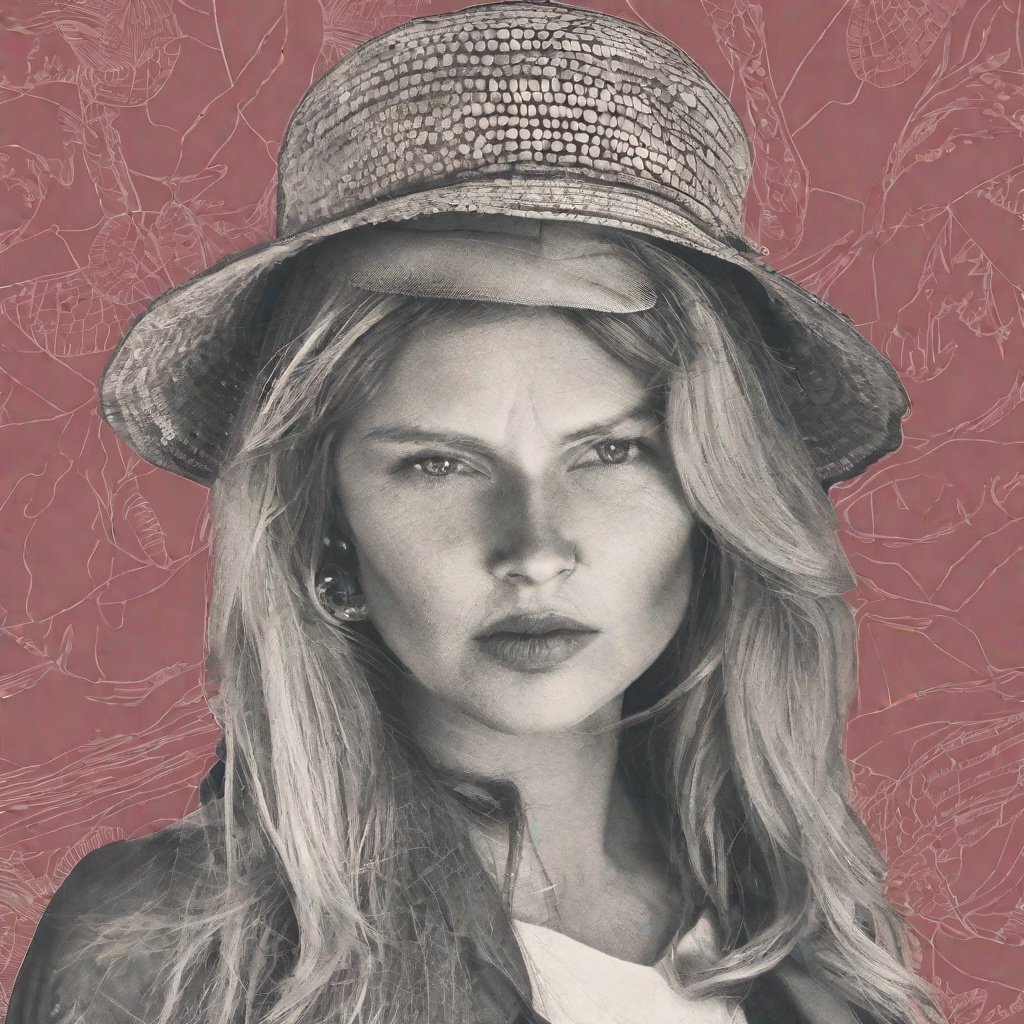

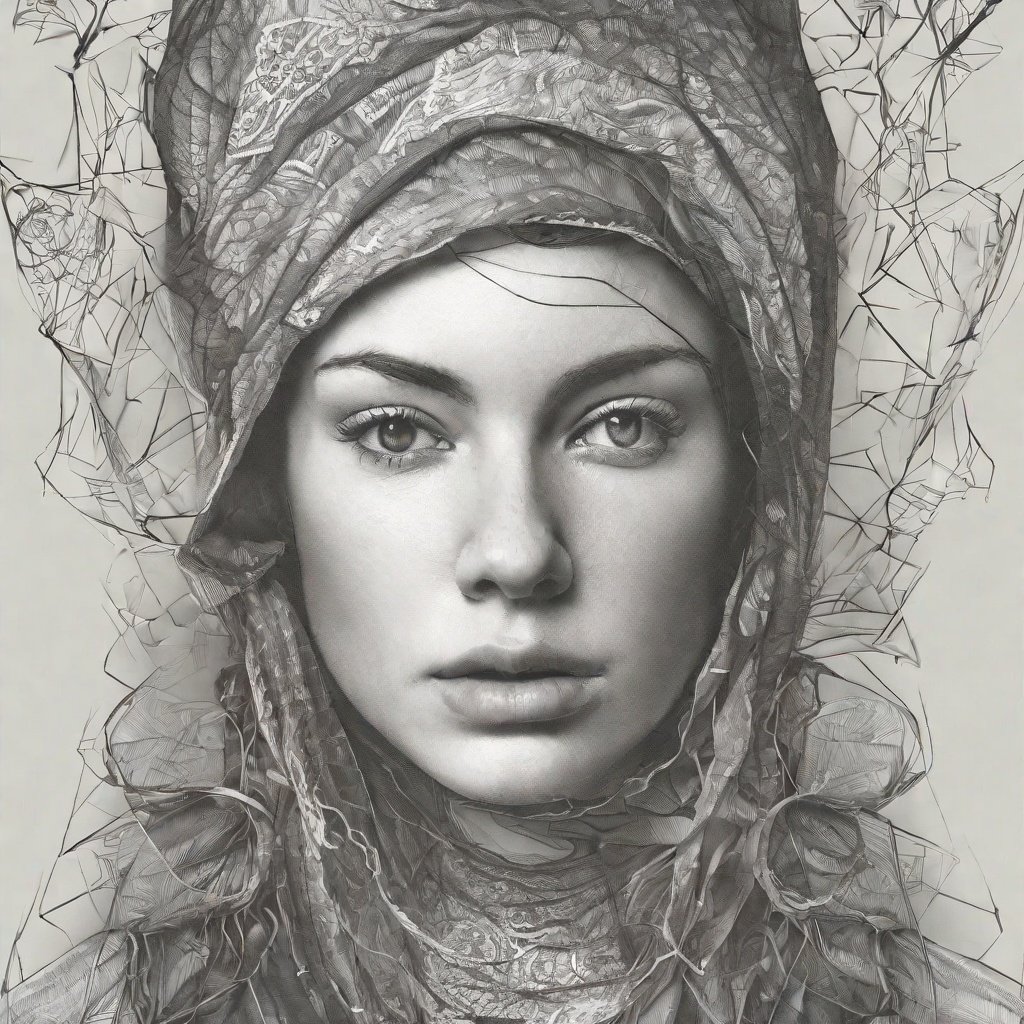

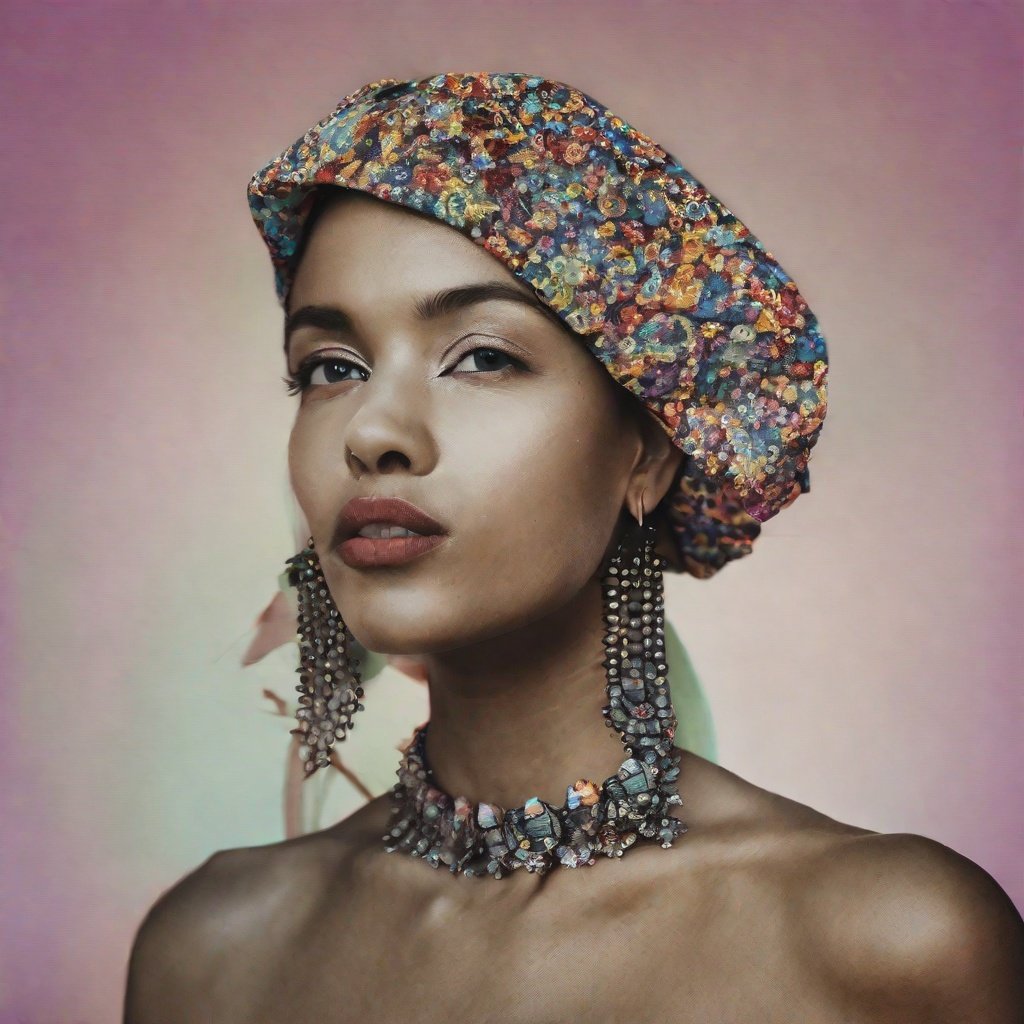

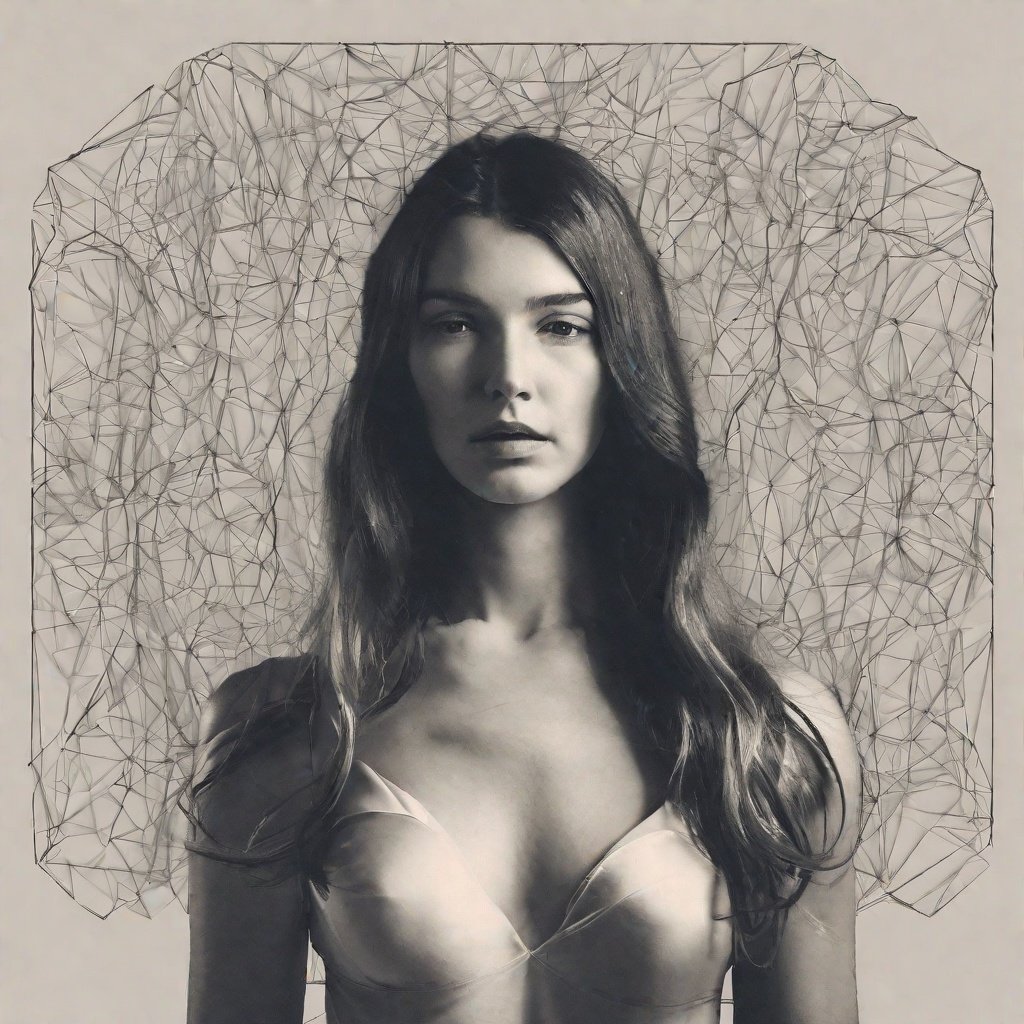

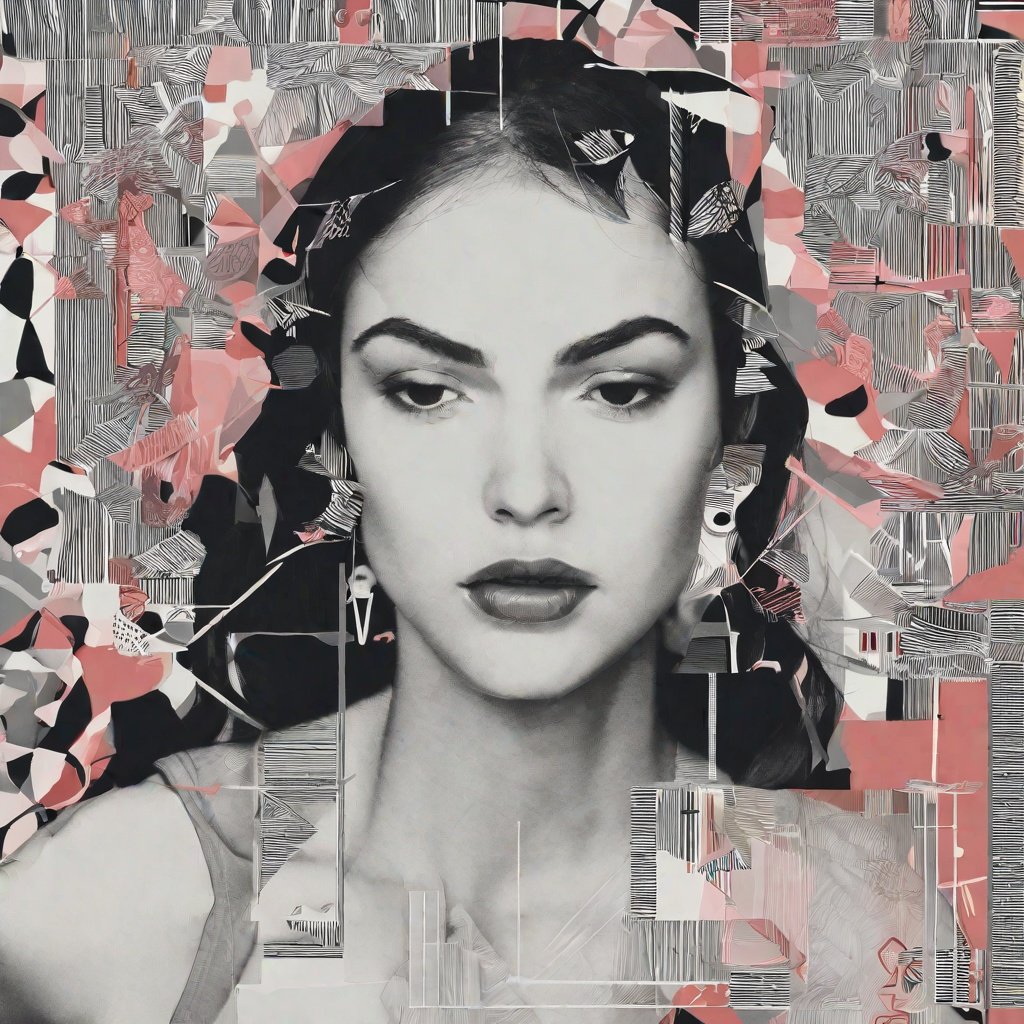

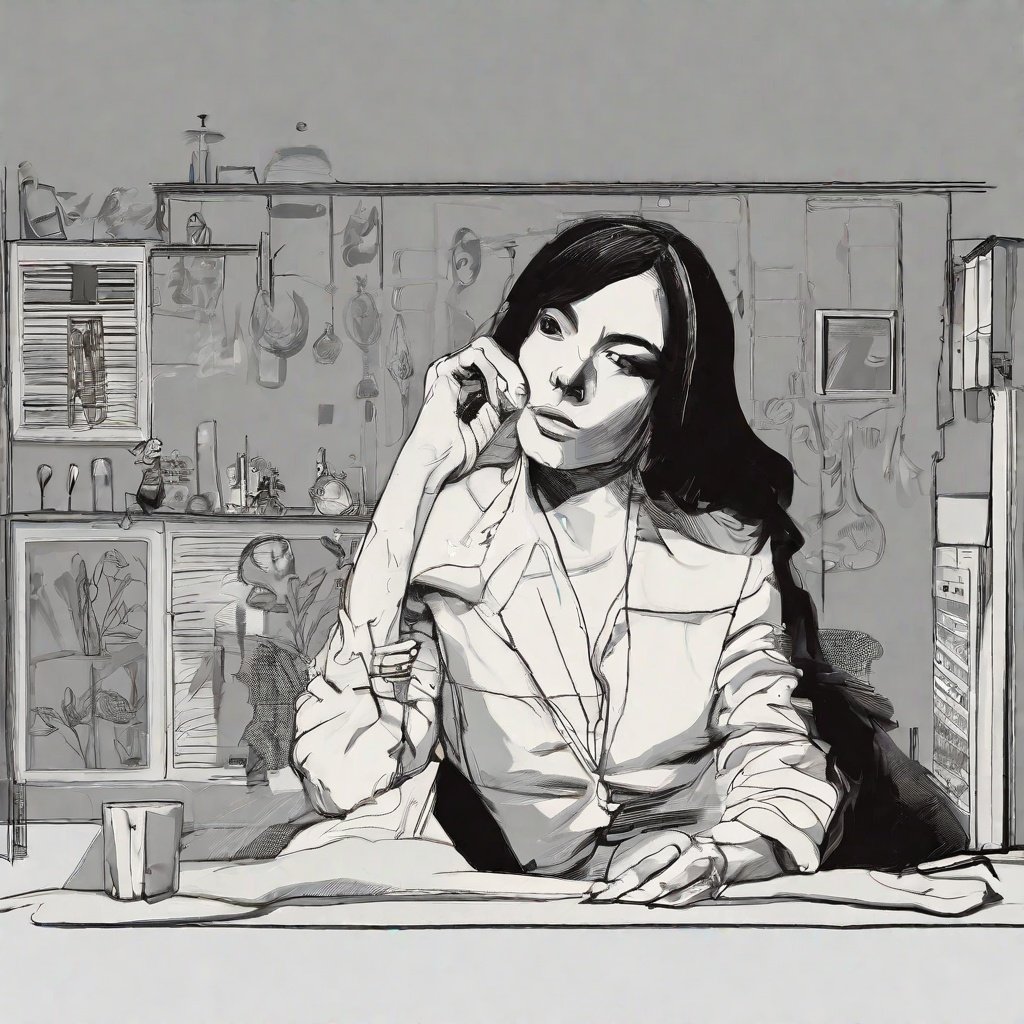

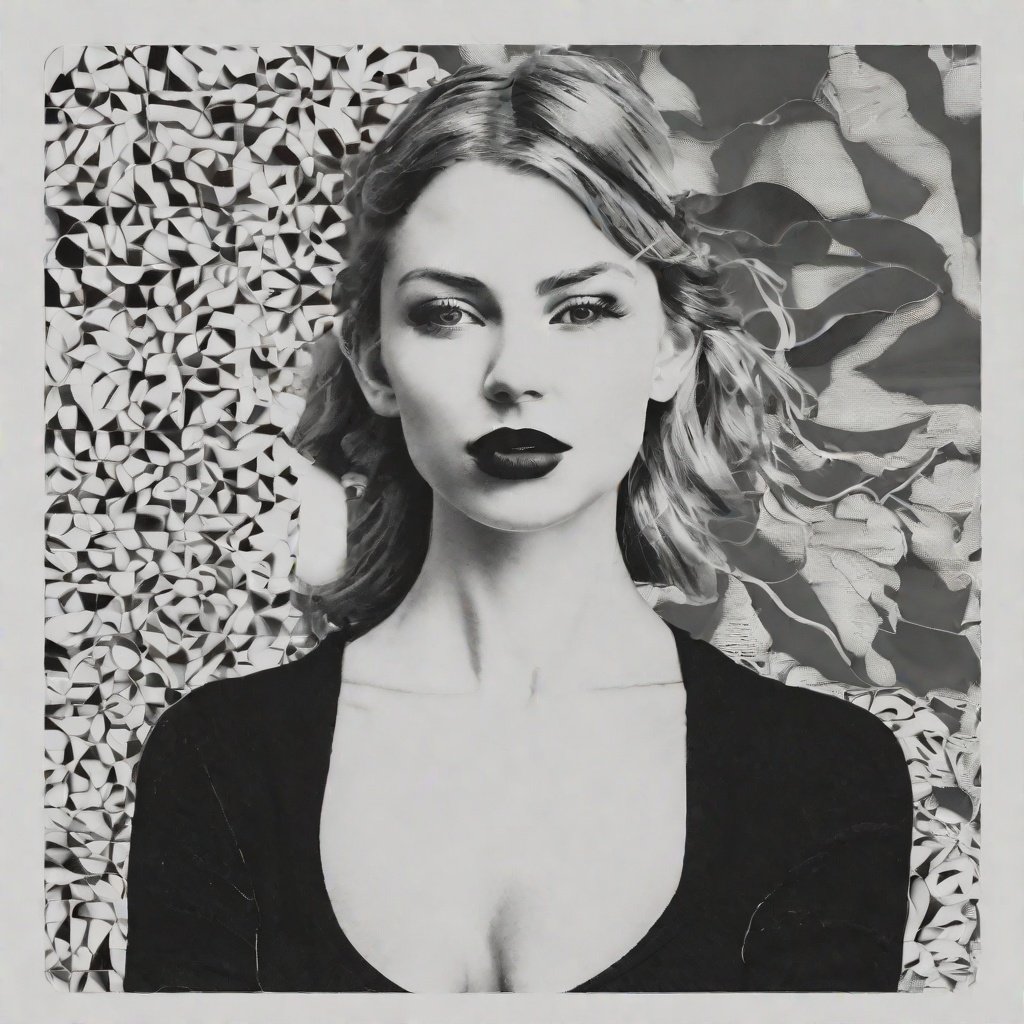

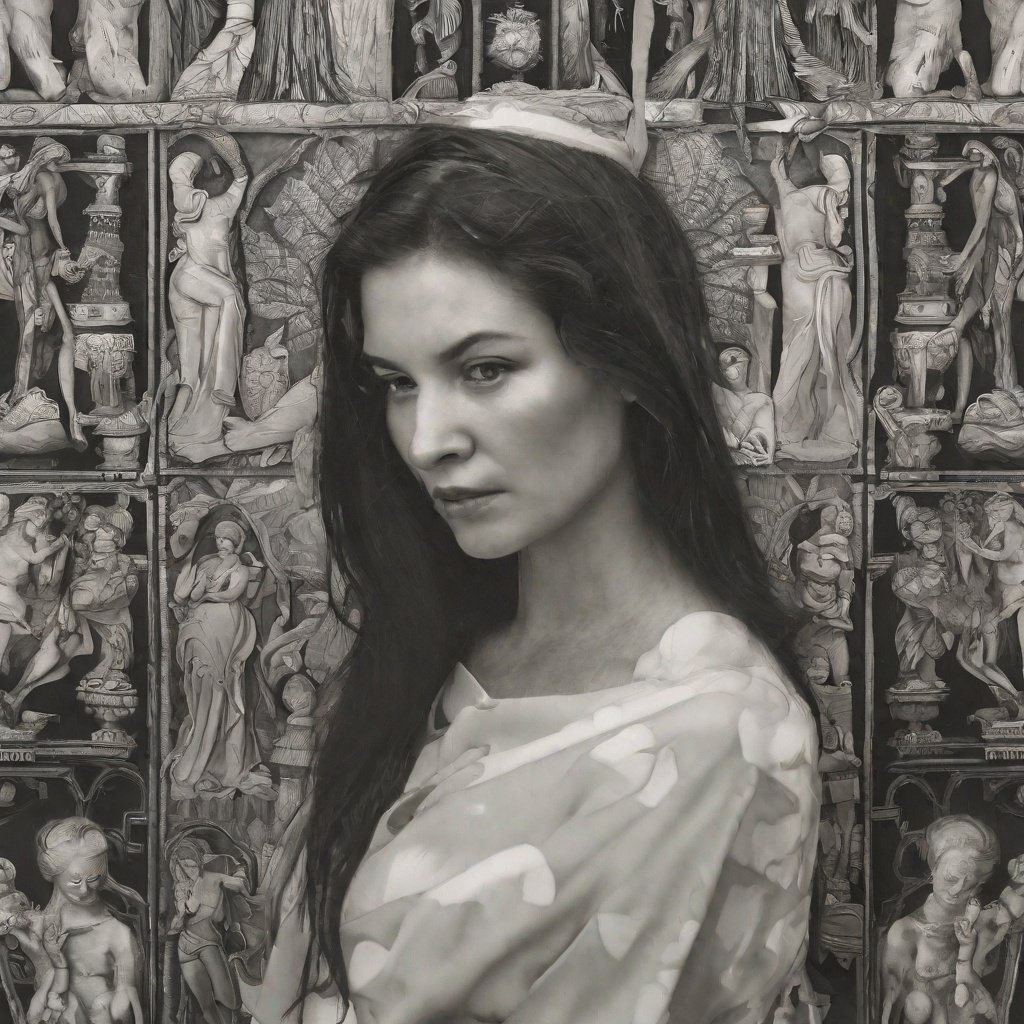

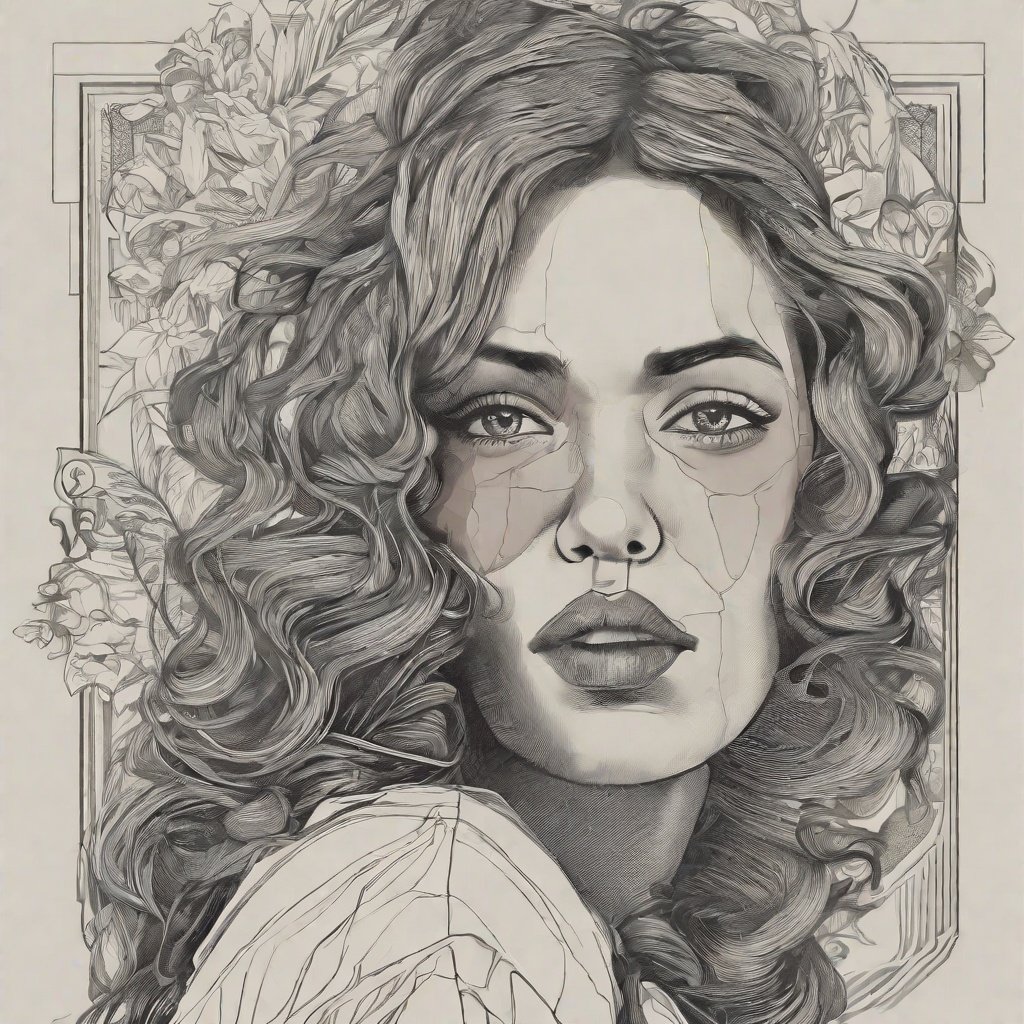

I generated 100 images with OpenAI's DALL·E 2 image generator, using the text prompt "woman." You can scroll down to see the full portrait gallery.

I'm pretty sure many, if not most, users of OpenAI's text prompt tool will say I'm totally missing the point by prompting it with just "woman." The tool works best when you give it a detailed prompt. But this week I found myself needing to examine the default to understand why it has such a hard time generating images of older women. Often, when I try to generate images of older women or non-White women, I get the message "This generation is blocked as the content is not appropriate."

From a diversity standpoint, it's like being back in my very segregated high school, where my classes were mostly White students with a smattering of Asian students, almost all from East Asian backgrounds. From an age perspective? I feel like I'm looking at the backlog of evidence files from a serial killer investigation. Or, to put a less grim filter on it, equal parts casting photos for "Call the Midwife" and "Midsommar." Based on what you can see of their bodies, 16% are not wearing a shirt.

To be clear, this is what OpenAI's datasets produce as the default for "woman." Have the developers never heard of statistically significant sampling? I'd love to see an AI tool as good as this one but trained on a statistically accurate sample of the world's various groups, ideally one where you could adjust which dataset is sampled, whether for historical accuracy or for more creative freedom. Why not include a dataset of cartoon figures and make that a filterable data layer too?

Honestly, this is the same familiar problem rearing its head again, as it has in technology and in the US since the inception of each.

I asked OpenAI's ChatGPT to respond to this blog post. Here's what it had to say:

Your blog post presents a thought-provoking critique of AI-generated imagery, specifically focusing on the representation of women and highlighting issues related to diversity and age. The comparison to your high school demographics serves as a powerful metaphor for the lack of diversity in AI outputs, and the analogy of evidence files from a serial killer investigation or casting photos for "Call The Midwife" vividly captures the skewed age representation. Your observations about the limitations of the AI's dataset and its implications for representation are insightful and underscore a broader issue within technology and society regarding diversity and inclusion.

Here are a few thoughts and suggestions on your blog post:

Highlighting the Issue: Your critique effectively highlights a significant problem in the way AI models, including those used for generating images, are trained. The reliance on datasets that do not represent the full spectrum of human diversity can perpetuate biases and exclusion.

Constructive Suggestions: You propose intriguing solutions, such as the development of AI tools trained on statistically accurate samples of various groups and the inclusion of adjustable datasets for historical accuracy or creative freedom. These suggestions are constructive and forward-thinking, offering potential pathways for improving AI technology to better serve diverse needs and represent the full range of human experiences.

Engagement with Broader Context: By situating this issue within the broader historical context of technology and societal biases in the US, you're inviting readers to consider how systemic issues of representation and inclusion are perpetuated through new technologies. This approach enriches the discussion by connecting specific technological criticisms to larger societal challenges.

Visualizing the Critique: Accompanying your written critique with an image gallery of 100 AI-generated portraits of women serves as a powerful visual demonstration of your points. This not only makes the critique more tangible for readers but also invites them to engage directly with the evidence of the biases you're discussing.

Encouraging Dialogue and Action: You might consider concluding your post with a call to action or invitation for dialogue among technologists, users, and other stakeholders. Encouraging a collaborative approach to addressing these biases can inspire constructive discussions and potential solutions.

Exploring Diversity in AI Representation: Further exploration into how AI can be leveraged to enhance rather than diminish diversity could offer an optimistic perspective on the potential for technology to evolve in a more inclusive direction. Highlighting existing initiatives or proposals for more diverse and inclusive AI training methods could provide readers with hope and direction for future developments.

Your blog post is a valuable contribution to the ongoing conversation about bias, diversity, and representation in technology. By articulating these issues clearly and proposing thoughtful solutions, you're helping to advance the discourse in a meaningful way.

As always, ChatGPT was compensated for this input.